While Artificial Intelligence is still a long way from giving us the sentient personal assistants we were promised, information professionals are applying AI to improve their work.

Introduction

Over the last six decades, Artificial Intelligence (AI) has delivered very little of what it has promised and frequently requires human intervention in its practical application. With the recent buzz around OpenAI’s ChatGPT, I thought it would be a good time to discuss how AI, specifically machine learning, is being used to manage digital assets. In this first installment in a series about different types of machine learning, I will focus on Natural Language Processing (NLP), a branch of AI that relies heavily on machine learning. NLP is typically used for text analysis, including topic modeling, classification, text prediction (e.g., auto-fill or type-ahead), speech-to-text transcription, and ‘reading’ handwritten notes. I hope you’ll read on to learn more.

Here’s what to expect in this post:

- Why NLP matters to me

- What is NLP

- How NLP works

- How NLP is being used

- What we can do with NLP

- Benefits of NLP

- Drawbacks of NLP

Why I Find it Worth the Effort

As a graduate student and in the various jobs I’ve held since, I’ve wanted to read and understand text faster:

- To skim large quantities of newspapers, find advertisements, and pull out the names of theaters, actors, and performances for The London Stage Project

- To auto-classify recipe and food images at America’s Test Kitchen

- To index and summarize vast collections of data so that I can more quickly build controlled vocabularies for my clients

Natural Language Processing, combined with open-source code libraries, makes all of this possible with a modest amount of skill and effort.

What is Natural Language Processing?

A subfield of linguistics, computer science, and artificial intelligence, Natural Language Processing (NLP) allows computers to process, analyze, and find ‘meaning’ in large sets of text and spoken-word data. Whether we know it or not, we rely on NLP in our daily lives: search engines, speech-to-text, machine translation, and spam filtering all depend on it.

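To see it in action:

- In a local database, search for “what is love?”

- In a new browser window, type the same question into Google.

- Compare the results. The local database most likely matches only the literal words, while Google interprets the question as a whole and returns relevant answers. That’s NLP in action!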

How does it work?

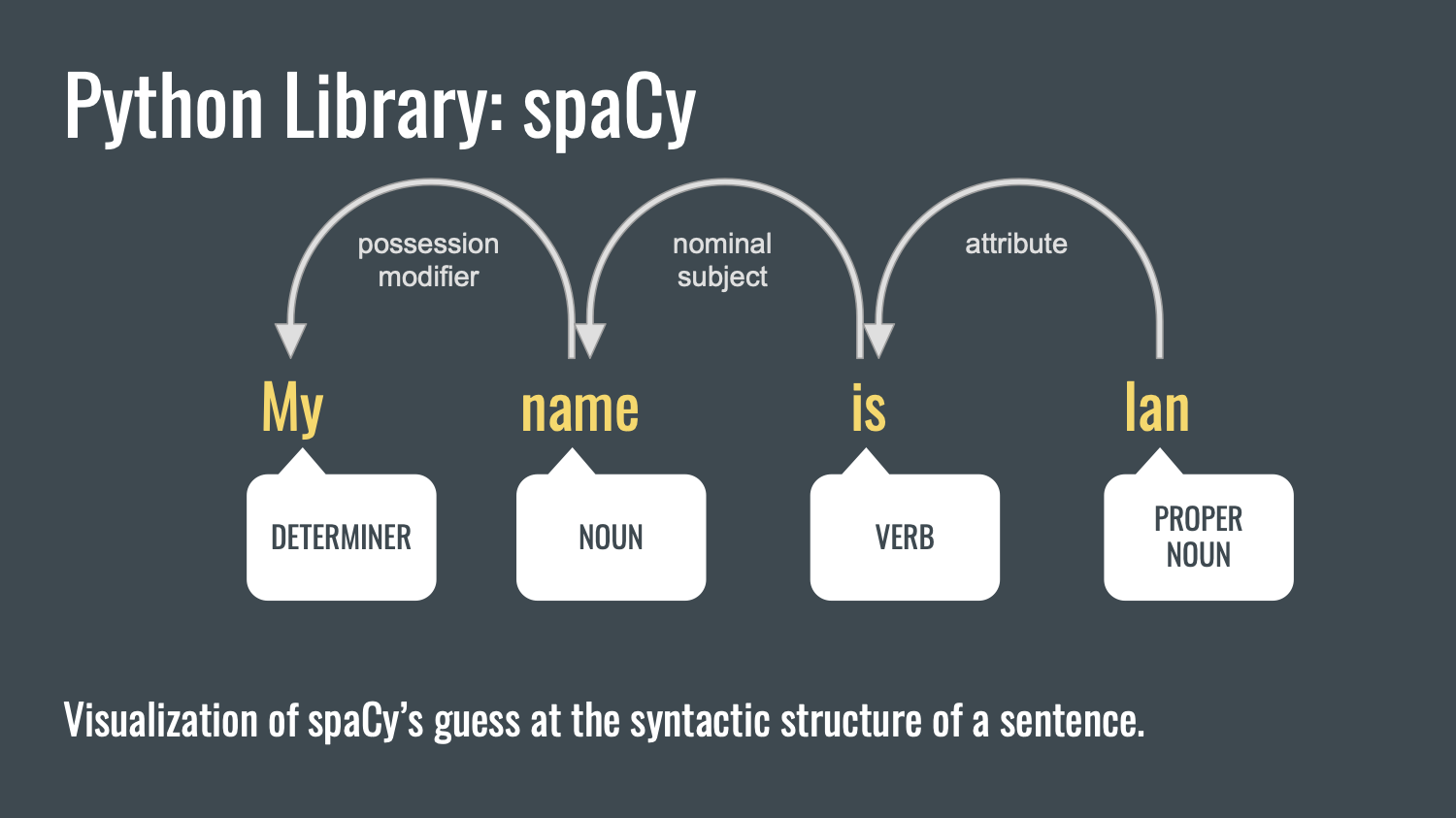

Language has a structure that can be broken down into different parts. In primary school, we learned to identify nouns, pronouns, adjectives, verbs, adverbs, prepositions, conjunctions, and so on. NLP’s part-of-speech tagging does this at scale with only a few lines of code. A related technique, named entity recognition (NER), identifies people, places, organizations, and other named things: in the example above, "Ian" is recognized as a person’s name. Imagine how quickly you could generate a name authority list if you could extract names from a collection of thousands of PDFs!
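Here is a minimal, model-free sketch of the name-authority idea, under loud assumptions: it swaps real NER for a hypothetical rule (a capitalized name following an honorific such as "Mr." or "Mrs."), and the sample documents are invented. A statistical NER pipeline such as spaCy’s, which labels people as `PERSON`, would replace `extract_names`; the counting and sorting step could stay the same.

```python
import re
from collections import Counter

# Hypothetical sample text standing in for text already extracted from PDFs.
DOCUMENTS = [
    "Hamlet. Hamlet by Mr. Holloway; Ophelia by Mrs. Ashby.",
    "The Rivals. Captain Absolute by Mr. Holloway; Lydia by Miss Trent.",
    "Macbeth. Macbeth by Mr. Dorran; Lady Macbeth by Mrs. Ashby.",
]

# Assumed rule (not real NER): an honorific, an optional period, whitespace,
# then one or more capitalized words. The captured group omits the honorific.
NAME_PATTERN = re.compile(
    r"\b(?:Mr|Mrs|Miss|Dr)\.?\s+([A-Z][a-z]+(?:\s+[A-Z][a-z]+)*)"
)


def extract_names(text: str) -> list[str]:
    """Return every name that follows an honorific in `text`."""
    return NAME_PATTERN.findall(text)


def build_authority_list(documents: list[str]) -> list[tuple[str, int]]:
    """Count names across all documents, most frequent first, then A to Z."""
    counts = Counter()
    for doc in documents:
        counts.update(extract_names(doc))
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    for name, count in build_authority_list(DOCUMENTS):
        print(f"{name}\t{count}")
```

The rule is brittle: it misses any name without an honorific, and a name directly followed by another capitalized word would be merged into it. That brittleness is exactly why trained NER models are worth the setup effort.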

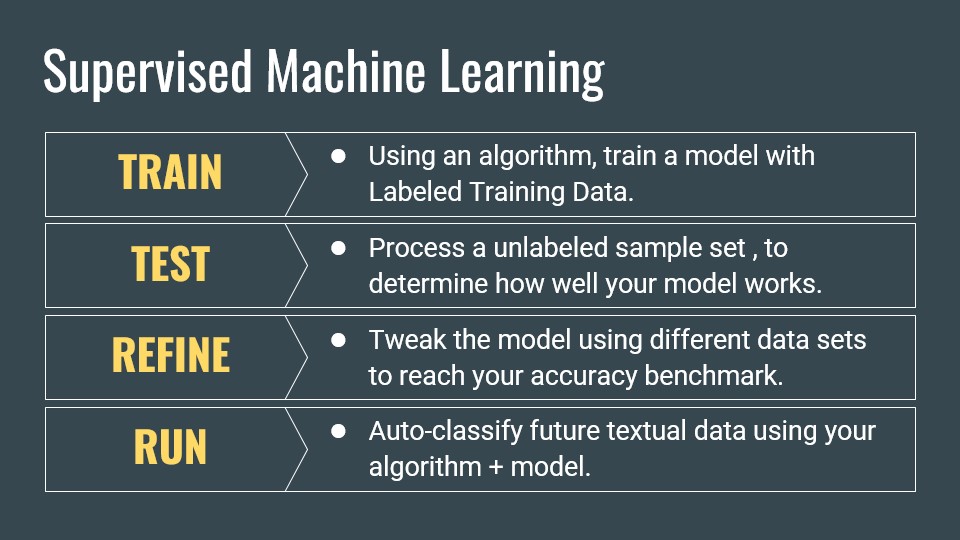

Taking this method to the next level, we arrive at auto-classification, a type of supervised machine learning.

- Using an NLP algorithm, train a model on text paired with its known classification (labeled training data)

- Run the model on a smaller, held-out sample it has not seen before (testing data) to measure how well it works

- Tweak the model, or train it on different data sets, until it reaches your accuracy benchmark

- Use the finished model to auto-classify future textual data

- But beware of false positives. More on this later.
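The train, test, and tweak steps above can be sketched in plain Python. This is a minimal multinomial Naive Bayes classifier, one common choice swapped in for illustration (the steps above don’t name a specific algorithm), trained on a handful of hypothetical labeled snippets. The held-out test set includes one deliberately tricky example so the false-positive check has something to catch.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labeled snippets; real training sets need far more examples.
TRAIN = [
    ("Whisk the eggs and sugar, then bake the cake for 30 minutes", "recipe"),
    ("Simmer the tomato sauce with garlic and basil", "recipe"),
    ("Roast the chicken with lemon and thyme until golden", "recipe"),
    ("This skillet heats evenly and the handle stays cool", "equipment"),
    ("We tested ten blenders for power, noise, and price", "equipment"),
    ("The knife held its edge after weeks of testing", "equipment"),
]

# Held-out testing data the model never sees during training.
TEST = [
    ("Bake the bread until golden", "recipe"),
    ("We tested this skillet for even heat", "equipment"),
    ("Test the oven thermometer before you bake", "equipment"),
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep only alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total_words = {
            label: sum(counts.values()) for label, counts in self.word_counts.items()
        }
        return self

    def predict(self, text):
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # Start from the log prior, then add one log likelihood per known word.
            score = math.log(doc_count / n_docs)
            denominator = self.total_words[label] + len(self.vocab)
            for token in tokenize(text):
                if token not in self.vocab:
                    continue  # ignore words never seen in training
                score += math.log((self.word_counts[label][token] + 1) / denominator)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


def evaluate(model, test_data, positive_label):
    """Return accuracy and the texts wrongly labeled as `positive_label`."""
    correct, false_positives = 0, []
    for text, true_label in test_data:
        predicted = model.predict(text)
        if predicted == true_label:
            correct += 1
        elif predicted == positive_label:
            false_positives.append(text)
    return correct / len(test_data), false_positives


if __name__ == "__main__":
    model = NaiveBayesClassifier().fit(*zip(*TRAIN))
    accuracy, false_positives = evaluate(model, TEST, positive_label="recipe")
    print(f"accuracy: {accuracy:.2f}")
    print("false positives:", false_positives)
```

On this toy data, the thermometer sentence, which is really about equipment, is classified as a recipe because "the" and "bake" appear more often in the recipe examples. That is the false-positive problem in miniature. For real projects, libraries such as scikit-learn provide tested implementations (for example, `MultinomialNB`).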

How NLP is being used

NLP is the foundation of many chatbots, including ChatGPT. While these virtual helpers are good at answering simple (programmable) questions 24/7, they are also pretty bad at understanding complex ones. Just ask ChatGPT, "A cow died. When will it be alive again?" Instead of responding that the question cannot be answered, it will try to calculate how long it takes for a new cow to be born. Goofy! In such circumstances, chatbots would do best to refer complicated questions to a live, thinking person.
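One simple way to make a chatbot hand off questions it can’t handle is a confidence threshold. The sketch below is a toy FAQ bot, not how ChatGPT works: it scores a question against hypothetical stored questions using word overlap (Jaccard similarity) and escalates to a person when no match clears an assumed threshold of 0.5.

```python
import re

# Hypothetical FAQ entries; a real bot would load these from a knowledge base.
FAQ = {
    "what are your opening hours": "We are open 9am to 5pm, Monday to Friday.",
    "how do i reset my password": "Use the password reset link on the login page.",
    "how do i request a high resolution image": "Submit the request form for that image.",
}

HANDOFF = "I'm not sure I can answer that. Let me connect you with a staff member."


def words(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words the two sets have in common (0.0 to 1.0)."""
    return len(a & b) / len(a | b) if a | b else 0.0


def answer(question: str, threshold: float = 0.5) -> str:
    """Reply with the closest FAQ answer, or hand off below the threshold."""
    asked = words(question)
    best_reply, best_score = HANDOFF, 0.0
    for faq_question, reply in FAQ.items():
        score = jaccard(asked, words(faq_question))
        if score > best_score:
            best_reply, best_score = reply, score
    return best_reply if best_score >= threshold else HANDOFF
```

The cow question shares almost no words with the stored questions, so it falls below the threshold and goes to a person. Word overlap is a crude stand-in for the language models behind ChatGPT, but the same hand-off logic could wrap any scoring method that produces a confidence value.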

Companies and institutions use NLP to address legacy metadata issues and to provide access to their collections. UC Riverside Library used NLP to identify women referred to by their spouses’ names and replaced those references with the women’s given or birth names. Virginia Tech University Libraries was recently awarded a grant to advance the discovery, use, and reuse of items in its collection by allowing students to mine the text of books and documents. Constellate, a service offered by JSTOR’s parent organization, ITHAKA, gives users a text analytics service for mining archival repositories of scholarly and primary source content. The Allegheny County Department of Human Services uses NLP to make case note data more accessible and digestible, saving case workers time and effort while enabling better-informed decisions.

In my work, I use NLP to extract terms and synonyms for client controlled vocabularies, identify keywords during cataloging, auto-classify assets based on extracted metadata, develop chatbots that answer user questions, and improve autocorrect and autocomplete functionality in user interfaces.
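For keyword and term extraction, TF-IDF (term frequency times inverse document frequency) is one classic, simple way to surface candidate terms: words that are frequent in one record but rare across the collection score highest. The sketch below is an assumption about how such a step might look, not a description of any particular workflow; the stop-word list and sample catalog descriptions are hypothetical.

```python
import math
import re
from collections import Counter

# Small, hypothetical stop-word list; libraries like NLTK ship fuller ones.
STOP_WORDS = {"a", "an", "and", "at", "by", "for", "in", "of", "on", "the", "to", "with"}

# Hypothetical catalog descriptions.
RECORDS = [
    "Lighthouse keeper logbook: lighthouse repairs and storm damage",
    "Storm photographs of the flooded harbor",
    "Harbor master letters on harbor fees",
]


def tokenize(text: str) -> list[str]:
    """Lowercase, keep alphabetic words, and drop stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def top_keywords(documents: list[str], k: int = 3) -> list[list[str]]:
    """Return the k highest TF-IDF terms for each document (ties sorted A to Z)."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    keywords = []
    for tokens in tokenized:
        term_counts = Counter(tokens)
        scores = {
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in term_counts.items()
        }
        ranked = sorted(scores, key=lambda term: (-scores[term], term))
        keywords.append(ranked[:k])
    return keywords


if __name__ == "__main__":
    for record, terms in zip(RECORDS, top_keywords(RECORDS)):
        print(f"{terms}  <- {record}")
```

"Harbor" ranks below one-off words in the third record because it also appears in the second; TF-IDF rewards terms that are distinctive, not just frequent. Treat the output as candidate terms for a person to review, not a finished vocabulary.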

Benefits of NLP

There is a wide range of useful applications for Natural Language Processing. NLP excels at processing raw, unstructured data, which makes it a strong tool for big data analysis. Open-source code libraries such as spaCy and NLTK are continually being updated and improved. As mentioned above, NLP is great at classifying documents, but it can also ‘read’ handwritten text. This could be a game changer if you’re an archivist in charge of a collection that includes handwritten notes. NLP is adept at speech recognition and is frequently leveraged by DAM vendors to transcribe videos. For smaller jobs, you can run NLP programs on your desktop machine, which makes it a tool accessible to many of us.

Drawbacks of NLP

Because it is still a maturing technology, Natural Language Processing has several drawbacks. If you’re building a solution from scratch, NLP requires training, testing, refining, and more training. The algorithm’s decision-making process is usually hidden from users; it is essentially a black box. Models can often be difficult to customize, because doing so means preparing and processing new sets of training data. The algorithms, and the data on which they are based, reflect the biases and values of the people who developed them. Chatbots lie… err, make mistakes… because they lack ‘understanding’ and have no concept of the real world in which we live.

How do you use Natural Language Processing?
