Introduction

DAM professionals design and implement technology, standards, and workflows to support their clients. As part of this process, we must evaluate the results of our work against pre-established criteria. Setting acceptable benchmarks helps us judge whether an endeavor is (or was) successful. For example, comparing the outcome or impact of auto-cataloging before and after it has been deployed can help demonstrate its value. It is therefore important to include an evaluation in any DAM project or program.

An evaluation produces quantitative and qualitative data that help us make informed decisions. Assessing the success or failure of a service, project, or program generally leads to improvements. The results may help reduce costs by highlighting expensive services that should be cut. Alternatively, they can show where resources are best allocated.

Evaluation is intertwined with project planning. Without an evaluation, it would be impossible to determine whether a plan's goals and objectives have been met. Similarly, an initial evaluation helps identify the issues a project plan needs to address. When assessed properly, services can be shown to either support or impede an organization's goals.

Regularly assessing an existing DAM system is critical to the continued success of a digital program. New technology often paves the way for cheaper and more efficient methods of managing digital assets. Why keep spending so much money on a sports car when a workhorse will do? Conversely, the sports car you always wanted may offer components critical to an emerging technology your company is adopting. Gathering and frequently reassessing functional and non-functional requirements will help you make the case for customizing your current system. Alternatively, the results of your evaluation can be used to justify adopting a new DAM system.

Types of Evaluation

There are two main types of evaluation research: formative and summative. A formative evaluation is carried out on an ongoing basis, for example during the development or improvement of a DAM system, so that its findings can shape the work while it is still in progress. A summative evaluation measures the efficacy of a system once it has been launched, and its findings are generally included in a final report.

A user-centered evaluation helps researchers objectively gauge user satisfaction with a digital asset retrieval system. Without user input, experts often rely on their own diagnosis, which can lead to ill-informed decisions. By assessing the search, browse, and navigation experience, satisfaction with retrieved results, the relevance of records, and the overall information retrieval experience, administrators can determine which aspects work and which need to be improved.

A comparative evaluation of the current and the proposed service or program may help highlight areas in need of development. If your company allows it, consider sharing snapshots of your DAM user interface at the next local DAM Meetup. Comparing your DAM UI with those of similar businesses can suggest concrete improvements to its design.

Another type of evaluative study that has received much attention of late is cost-benefit analysis. This method, closely related to calculating return on investment (ROI), compares the cost of purchasing or building a service or program with the cost savings it produces once it has been deployed. Although it is challenging to quantify the value of a service that does not produce products for sale, some information professionals have found ways to translate the efficiency their services and programs bring into quantifiable values.
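To make the arithmetic concrete, here is a minimal sketch of a simple cost-benefit calculation. It assumes, purely for illustration, that the benefit of a DAM service (say, auto-cataloging) can be expressed as staff hours saved per year multiplied by an hourly rate. The class and function names (`ServiceCosts`, `annual_savings`, `roi`, `payback_years`) and all figures are hypothetical, not taken from any particular methodology.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ServiceCosts:
    """Hypothetical cost inputs for a DAM service, all in the same currency."""
    upfront: float         # one-off purchase or build cost
    annual_running: float  # yearly licences, hosting, maintenance


def annual_savings(hours_saved_per_year: float, hourly_rate: float) -> float:
    """Assumption: efficiency gains are valued as staff hours saved x hourly rate."""
    return hours_saved_per_year * hourly_rate


def roi(costs: ServiceCosts, yearly_savings: float, years: int) -> float:
    """Simple, undiscounted ROI over a horizon: (total savings - total cost) / total cost."""
    total_cost = costs.upfront + costs.annual_running * years
    total_savings = yearly_savings * years
    return (total_savings - total_cost) / total_cost


def payback_years(costs: ServiceCosts, yearly_savings: float) -> Optional[float]:
    """Years until net yearly savings recover the upfront cost; None if they never do."""
    net_per_year = yearly_savings - costs.annual_running
    if net_per_year <= 0:
        return None
    return costs.upfront / net_per_year


if __name__ == "__main__":
    # Hypothetical auto-cataloging figures, for illustration only.
    costs = ServiceCosts(upfront=50_000, annual_running=10_000)
    savings = annual_savings(hours_saved_per_year=1_200, hourly_rate=40)
    print(f"3-year ROI: {roi(costs, savings, years=3):.0%}")       # 3-year ROI: 80%
    print(f"Payback: {payback_years(costs, savings):.2f} years")   # Payback: 1.32 years
```

This deliberately ignores the time value of money; a fuller cost-benefit analysis would typically discount future savings (for example, by computing net present value) and account for benefits that are hard to price.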

Criteria should be set up in advance of an evaluation. They can be created from scratch or adopted from an existing organization's framework. For example, the DAM Foundation's Ten Core Characteristics of a DAM can be used to determine whether your content management system (CMS) qualifies as a true DAM system.

Conclusion

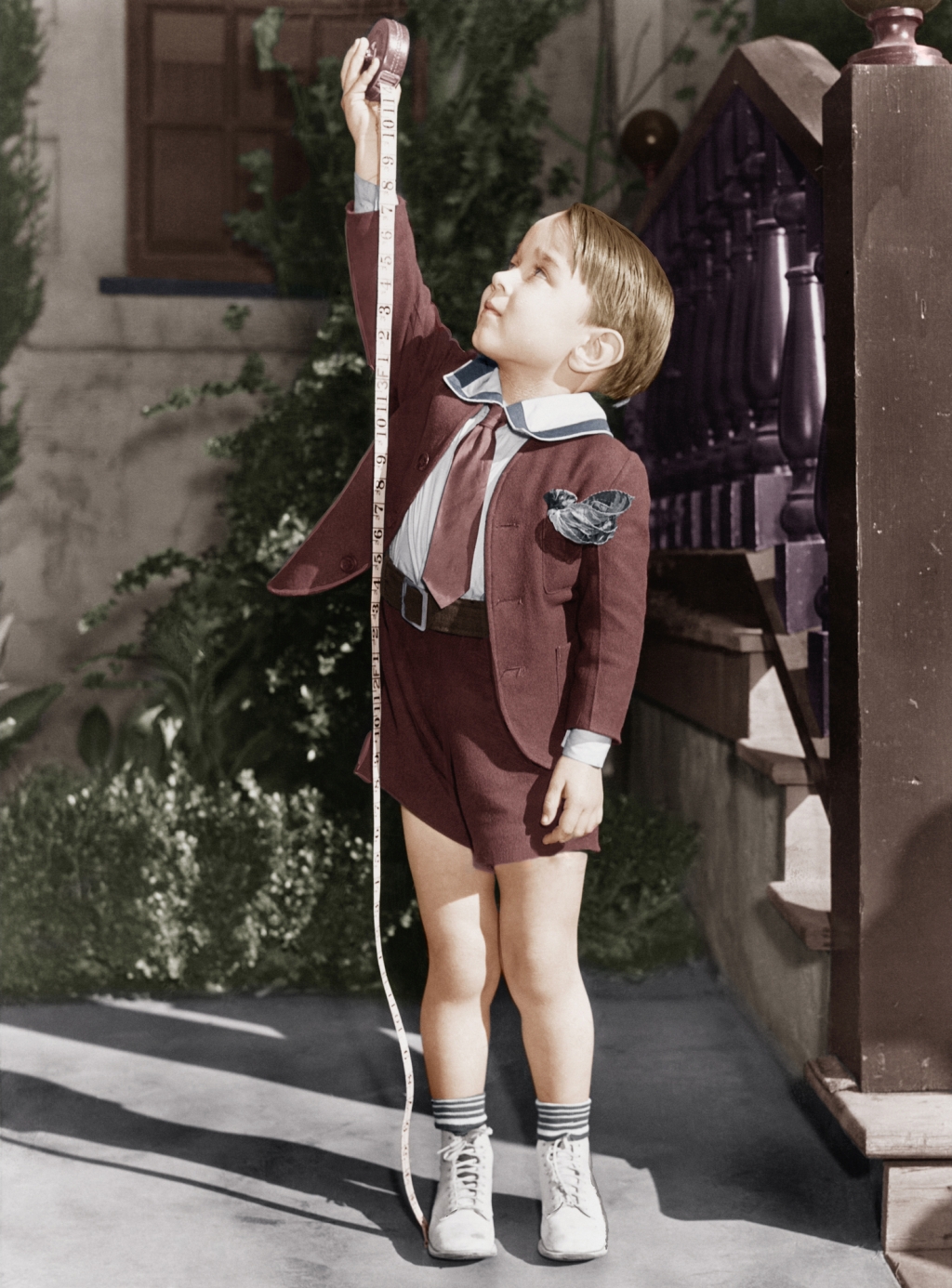

Evaluators should use criteria to assess the extensiveness, efficiency, effectiveness, quality, impact, and usefulness of a DAM system. Criteria are important because they establish a yardstick against which the performance or outcome of a service or program can be measured. Developing such criteria with stakeholders during the planning stages of a project or program helps ensure that you define what it needs in order to succeed.

How do you evaluate a DAM program?

References

Haycock, K., & Sheldon, B. E. (Eds.). (2008). The portable MLIS: Insights from the experts. Westport, CT: Libraries Unlimited.

DAM Foundation. (n.d.). Ten core characteristics of a DAM. Retrieved from http://damfoundation.org/ten-core-characteristics-of-a-dam/
